The first presidential election of the generative AI era is only weeks away — and we’re not ready

The 2024 elections are the first US presidential elections of the generative AI era, and we’re already seeing examples of the technology being used to impact how Americans cast their ballots.

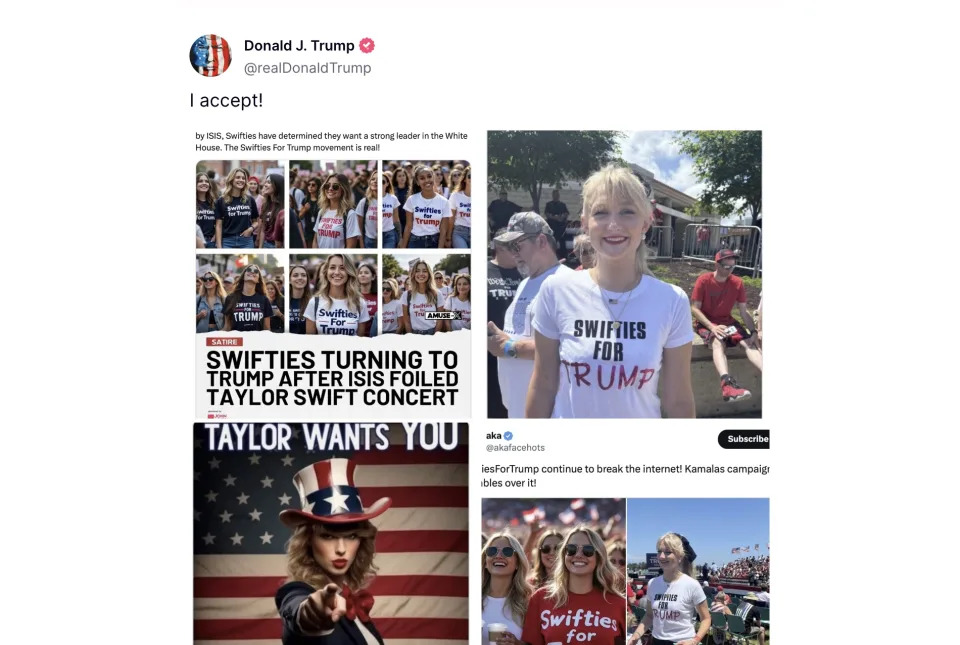

On Aug. 18, former President Donald Trump shared a series of AI-generated images of Taylor Swift fans wearing pro-Trump shirts, despite the fact that the photos originally appeared in a post marked as satire on X (formerly Twitter). In January, deepfake phone calls went out to some New Hampshire residents, attempting to discourage them from participating in the state’s Democratic primary.

With the election just a few short months away and generative AI detection technologies hit-or-miss, experts say we can expect to see more generative AI-based content designed to sow discord among the electorate.

“The danger is that if there is a type of AI disinformation … like the Taylor Swift images … if millions of people are exposed to it and only 10% or 15% do not realize that that's fake, that could be a substantial number for thinking about elections,” explained Augusta University political science professor Lance Hunter.

“[In] swing states, sometimes the margin of victory is less than 1%. So … a small number of people being exposed to this disinformation and not realizing it’s disinformation could be influential for election outcomes,” Hunter added.

Generative AI is already easy to use and easily accessible

Generative AI can be used to do more than create chatbots that answer your most inane questions. It can be used to produce images, recorded and live videos, and audio. And the incredibly fast proliferation of the technology means that people around the world can take advantage of it, including individuals and organizations seeking to use it for malicious purposes.

In fact, that has already happened in countries including India, Indonesia, and South Korea, though it’s unclear whether those efforts influenced voter outcomes. Still, there’s a real risk that phony videos or audio depicting either Trump or Vice President Kamala Harris saying or doing something they never did could go viral and influence votes.

The Department of Homeland Security’s Cybersecurity and Infrastructure Security Agency (CISA) says it’s already on the lookout for potential threats related to generative AI.

“Foreign adversaries have targeted U.S. elections and election infrastructure in previous elections cycles, and we expect these threats, to include the threat of foreign influence operations and disinformation, to continue in 2024,” Cait Conley, senior adviser to the director of CISA, said in a statement to Yahoo Finance.

“CISA provides state and local election officials and the public with guidance on foreign adversary tactics and mitigations, including addressing risks to election infrastructure from generative AI,” she added.

Stopping generative AI before it can influence elections

The problem with generative AI is that there are often few means of easily identifying whether content is made up or not. The technology has improved so quickly that it’s gone from generating images of people with 15 fingers to producing lifelike photos.

Last July, the Biden administration secured voluntary commitments from Amazon, Anthropic, Google, Inflection, Meta, Microsoft, and OpenAI to manage potential risks posed by AI, but there’s nothing forcing the companies to abide by those agreements.

“I think we probably will see bipartisan support at the federal level to create legislation to address this,” Augusta’s Hunter said. “Especially surrounding political campaigns, where that content can be taken down as soon as possible.”

Social media companies including Meta, TikTok, and X could also help prevent the proliferation of phony media intended to trick voters. That could include anything from clear labels identifying when something has been created using generative AI to a ban on AI-generated content.

More robust detection tools could also help web users better recognize generative AI images, videos, or audio, ensuring they aren’t suckered in by a convincing fake. But so far, identification software isn’t entirely accurate.

“The detector tools are inconsistent. Some of them, frankly, are snake oil. They aren't typically geared to say certainly yes, certainly not,” explained Alexios Mantzarlis, director of the Security, Trust, and Safety Initiative at Cornell University.

“They're going to tell you there's an 85% chance. And that's good. That's helpful, but at moments of great polarization, that might not resolve something.”

But with the election fast approaching and generative AI advancing by leaps and bounds, there may not be enough time to prevent bad actors from taking advantage of technology to spread chaos online before voters begin casting their ballots. Whether that remains the case for the 2028 elections, however, is unclear.

@DanielHowley.

Read the latest financial and business news from Yahoo Finance.